Researchers at the CDS have developed a novel software platform on which apps and algorithms can intelligently track and analyze video feeds from cameras spread across cities. Such analysis is useful not only for tracking missing persons or objects, but also for “smart city” initiatives such as automated traffic control.

Many cities worldwide have set up thousands of video cameras. Machine learning models can scour the feeds from these cameras for a specific purpose ‒ tracking a stolen car, for example. These models cannot work by themselves; they have to run on a software platform or “environment” (somewhat similar to a computer’s operating system). But existing platforms are usually rigid: they offer little flexibility to modify the model as the situation changes, or to test new models over the same camera network.

There has been a lot of research on increasing the accuracy of these models, but not enough attention has been paid to how to make a model work as part of a larger operation. To address this gap, DREAM lab has developed a software platform called Anveshak. It can not only run these tracking models efficiently, but also plug in advanced computer vision tools and intelligently adjust different parameters ‒ such as a camera network’s search radius ‒ in real time.

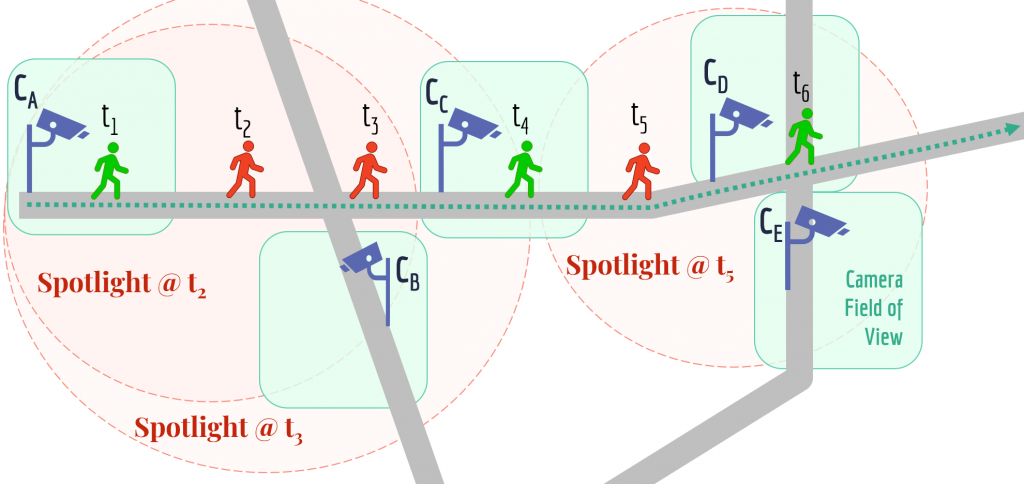

In their recently published paper, they show how Anveshak can be used to track an object (like a stolen car) across a 1,000-camera network. A key feature of the platform is that it allows a tracking model or algorithm to focus only on feeds from certain cameras along an expected route, and tune out other feeds. It can also automatically increase or decrease the search radius or “spotlight” based on the object’s last known position.
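The spotlight idea can be illustrated with a minimal sketch. It assumes, purely for illustration, that cameras have planar coordinates in metres and that the search radius grows with the time since the last sighting multiplied by an assumed maximum speed. The names `Camera`, `spotlight_radius` and `active_cameras` are hypothetical and are not taken from Anveshak.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    cam_id: str
    x: float  # metres east of an arbitrary origin (assumed coordinate system)
    y: float  # metres north of the same origin


def spotlight_radius(seconds_since_seen, max_speed_mps, min_radius_m=100.0):
    """Radius the object could have covered since it was last seen.

    Assumption: the radius is simply elapsed time times an upper bound on
    speed, with a floor so the spotlight never shrinks to nothing.
    """
    return max(min_radius_m, seconds_since_seen * max_speed_mps)


def active_cameras(cameras, last_pos, seconds_since_seen, max_speed_mps):
    """Return IDs of cameras inside the spotlight around the last known position."""
    radius = spotlight_radius(seconds_since_seen, max_speed_mps)
    lx, ly = last_pos
    return [c.cam_id for c in cameras
            if math.hypot(c.x - lx, c.y - ly) <= radius]


cameras = [Camera("a", 0, 0), Camera("b", 150, 0), Camera("c", 1000, 0)]
# Seen 5 s ago: only the nearest camera is analyzed.
just_seen = active_cameras(cameras, (0, 0), 5, 15.0)
# Not seen for 20 s: the spotlight widens to cover more cameras.
lost_for_a_while = active_cameras(cameras, (0, 0), 20, 15.0)
```

In this sketch the spotlight widens the longer the object goes unseen, and it contracts again once a new sighting resets `seconds_since_seen`.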

The platform is suitable even for resource-constrained environments, where the available computing power cannot be scaled up on the fly. Anveshak enables the tracking to continue uninterrupted even if the resources ‒ the type and number of computers that analyze the feeds ‒ are limited. For example, if the search radius needs to be increased and the computers become overwhelmed, the platform will automatically start dropping the video quality to save on bandwidth, while continuing to track the object.
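One way to picture this trade-off is the sketch below. It is not Anveshak's actual policy: it assumes a fixed, hypothetical ladder of resolutions and uses total pixels per frame as a stand-in for bandwidth and compute load. `QUALITY_LEVELS` and `choose_quality` are illustrative names.

```python
# Hypothetical quality ladder, highest first (width, height in pixels).
QUALITY_LEVELS = [(1920, 1080), (1280, 720), (640, 360)]


def choose_quality(num_active_cameras, capacity_full_quality_feeds):
    """Pick the highest resolution whose total pixel load fits the budget.

    capacity_full_quality_feeds: how many full-quality feeds the available
    computers can handle (an assumed, externally measured number).
    """
    full_w, full_h = QUALITY_LEVELS[0]
    budget = capacity_full_quality_feeds * full_w * full_h
    for width, height in QUALITY_LEVELS:
        if num_active_cameras * width * height <= budget:
            return (width, height)
    # Nothing fits: keep tracking at the lowest quality rather than stopping.
    return QUALITY_LEVELS[-1]


small_spotlight = choose_quality(10, capacity_full_quality_feeds=10)
wider_spotlight = choose_quality(20, capacity_full_quality_feeds=10)
widest_spotlight = choose_quality(50, capacity_full_quality_feeds=10)
```

As the number of active cameras grows past what the hardware can handle at full quality, the sketch steps down the ladder instead of dropping feeds, so tracking continues at reduced fidelity.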

In 2019, as part of a winning entry for the IEEE TCSC SCALE Challenge award, DREAM lab showed how Anveshak could potentially be used to control traffic signals and automatically open up “green routes” for ambulances to move faster. The platform used a machine learning model to track an ambulance on a simulated Bengaluru road network with about 4,000 cameras. It also employed a “spotlight tracking algorithm” to automatically restrict which feeds needed to be analyzed based on where the ambulance was expected to go.
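On a road network, restricting feeds to where a vehicle is expected to go can be approximated with a graph search. The sketch below is an assumption-laden illustration, not the algorithm from the challenge entry: it treats each junction as having one camera and selects every junction reachable within a given number of road segments using breadth-first search. The function name and the toy graph are hypothetical.

```python
from collections import deque


def cameras_within_hops(road_graph, start, max_hops):
    """Breadth-first search: junctions reachable from `start` in <= max_hops segments.

    road_graph maps a junction ID to the junctions directly connected to it.
    """
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop budget
        for neighbour in road_graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, hops + 1))
    return seen


# Toy road network: A-B-C-D in a line, with a side road A-E.
road_graph = {
    "A": ["B", "E"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C"],
    "E": ["A"],
}
one_hop = cameras_within_hops(road_graph, "A", 1)
two_hops = cameras_within_hops(road_graph, "A", 2)
```

Only the feeds from the returned junctions would need to be analyzed; the rest of the network can be ignored until the vehicle moves.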

DREAM lab is also working on incorporating privacy restrictions within the platform. For example, the platform could allow analytics that track vehicles but not analytics that track people, or analytics that track adults but not children. They are also working on ways to use Anveshak to track multiple objects at the same time.
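Since this work is still in progress, the following is only a hypothetical sketch of what such a restriction could look like: each analytic declares the class of object it tracks, and a deployment-level allow-list decides which analytics may run. `Analytic`, `permitted` and the class labels are illustrative assumptions, not part of Anveshak.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Analytic:
    name: str
    target_class: str  # kind of object the analytic tracks (assumed label)


def permitted(analytics, allowed_classes):
    """Keep only analytics whose target class is on the allow-list."""
    return [a for a in analytics if a.target_class in allowed_classes]


requested = [
    Analytic("stolen-car-tracker", "vehicle"),
    Analytic("pedestrian-tracker", "person"),
]
# Hypothetical policy: vehicles may be tracked, people may not.
runnable = permitted(requested, allowed_classes={"vehicle"})
```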

REFERENCE:

A. D. Khochare, A. Krishnan and Y. Simmhan, “A Scalable Platform for Distributed Object Tracking across a Many-camera Network,” in IEEE Transactions on Parallel and Distributed Systems, doi: 10.1109/TPDS.2021.3049450, Copyright IEEE